Generative AI is changing how businesses work, bringing new ways to improve customer service, sales and productivity. But as AI grows, so do concerns about data security and privacy.

Salesforce’s Einstein Trust Layer addresses these concerns by building security controls directly into the generative AI workflow, so protection does not get in the way of the user experience.

It protects sensitive information, allowing businesses to use AI to its full potential without risking the safety or privacy of their data.

What is Einstein Trust Layer?

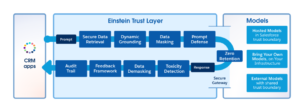

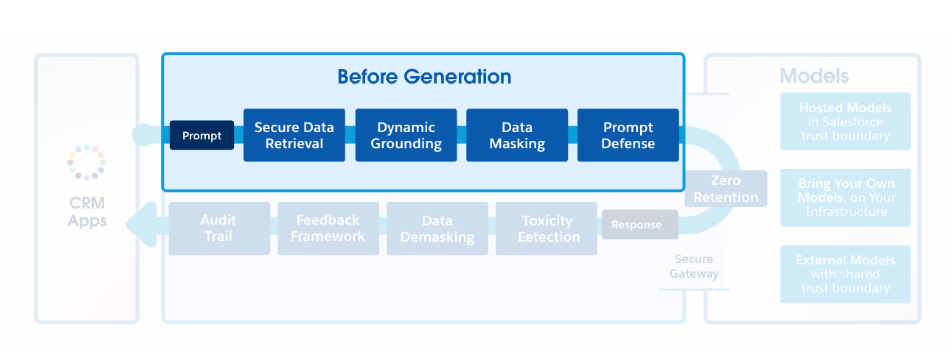

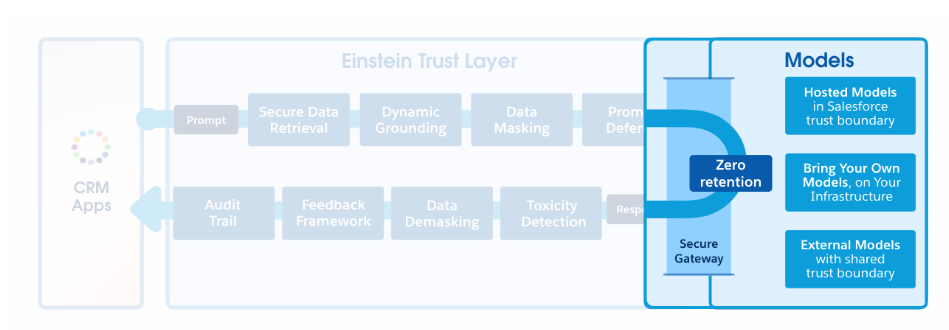

The Einstein Trust Layer is a security system that protects customer and company data while allowing the safe use of generative AI. It uses a series of protective gateways and retrieval methods to secure sensitive information. This ensures that AI-driven processes stay anchored in protected data, minimizing the risk of breaches or misuse.

By integrating essential data protection measures into the AI process, the Trust Layer provides Salesforce users with advanced AI features, such as automated responses and sales forecasting, without sacrificing security.

How does it work?

Dynamic Grounding with Secure Data Retrieval in Salesforce

Dynamic grounding in Salesforce merges relevant record data into the prompt, giving the model the context it needs to produce a useful response. The data is retrieved securely, respecting user permissions and field-level security: a prompt only includes information the requesting user is authorized to see, so all Salesforce role-based controls are preserved.
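To make the idea concrete, here is a minimal sketch of permission-aware grounding in Python. It is not Salesforce's implementation: the record, the `readable_fields` set, and the `ground_prompt` function are hypothetical names chosen for illustration, and real field-level security is enforced by the platform rather than by a simple set lookup.

```python
from string import Template

# Hypothetical record; field names are illustrative only.
RECORD = {"Name": "Acme Corp", "AnnualRevenue": "5000000", "Phone": "555-0100"}


def ground_prompt(template, record, readable_fields):
    """Merge record data into a prompt, hiding fields the user cannot read."""
    visible = {
        field: (value if field in readable_fields else "[restricted]")
        for field, value in record.items()
    }
    return Template(template).substitute(visible)


# Assumed scenario: this user may read Name and Phone, but not AnnualRevenue.
prompt = ground_prompt(
    "Write a follow-up email to $Name. Revenue: $AnnualRevenue.",
    RECORD,
    readable_fields={"Name", "Phone"},
)
print(prompt)
```

The point of the sketch is the ordering: permissions are applied before the record data ever reaches the prompt, so a restricted field cannot leak into the model's context.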

Data Masking in Salesforce

Data masking in Salesforce protects sensitive information by detecting it and replacing it with placeholders before the prompt is sent to the LLM. This feature supports multiple regions and languages, making it easier for organizations to meet local data protection requirements.

Administrators can choose which data fields to mask, so sensitive information is concealed while non-critical data remains accessible.
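As a rough illustration of pattern-based masking, the sketch below replaces detected values with placeholder tokens and keeps a mapping of what was replaced. The regular expressions, the `mask` function, and the `<EMAIL_1>` token format are assumptions made for this example; the Trust Layer's actual detection methods are not described here and are likely more sophisticated.

```python
import re

# Deliberately simple, hypothetical patterns; real detection covers more cases.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def mask(text, enabled):
    """Replace values of the enabled types with tokens; return text and mapping."""
    mapping = {}
    for label, pattern in PATTERNS.items():
        if label not in enabled:
            continue  # this data type is not selected for masking

        def replace(match, label=label):
            token = f"<{label}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token

        text = pattern.sub(replace, text)
    return text, mapping


# Only email masking is switched on, so the phone number stays visible.
masked, mapping = mask("Contact jane@example.com or 555-123-4567.", {"EMAIL"})
print(masked)
```

Passing a different `enabled` set mirrors the choice of which data types to mask: anything not selected passes through unchanged.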

Managing AI Risks with System Policies (Prompt Defense)

System policies are essential for ensuring accuracy and preventing harmful or incorrect outputs in AI-driven systems. In Salesforce, these policies vary depending on the AI feature or use case.

For example, customer service bots may have stricter guidelines to avoid misleading information, while marketing tools might allow more flexibility for creative content.
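One simple way to picture use-case-specific policies is as guardrail instructions prepended to each prompt. The sketch below assumes that approach; the policy wording, the `SYSTEM_POLICIES` table, and `build_prompt` are hypothetical and do not reflect the actual policy text Salesforce uses.

```python
# Hypothetical policy text per use case; illustrative wording only.
SYSTEM_POLICIES = {
    "customer_service": (
        "Answer only from the provided context. "
        "If you are not sure, say you do not know."
    ),
    "marketing": (
        "Creative wording is welcome, but do not invent product claims."
    ),
}


def build_prompt(use_case, user_prompt):
    """Prepend the policy for the given use case to the user's prompt."""
    policy = SYSTEM_POLICIES[use_case]
    return f"{policy}\n\n{user_prompt}"


service_prompt = build_prompt("customer_service", "When does my warranty expire?")
print(service_prompt)
```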

Zero-Data Retention Policy

Salesforce upholds a strict zero-data retention policy when using large language models (LLMs) from third-party providers such as OpenAI and Azure OpenAI. This ensures that:

- No Data Retention: Third-party LLMs do not store customer data after processing.

- No Use for Training: Data is not used for model training or product improvements.

- No Human Access: Third-party provider employees cannot access customer data.

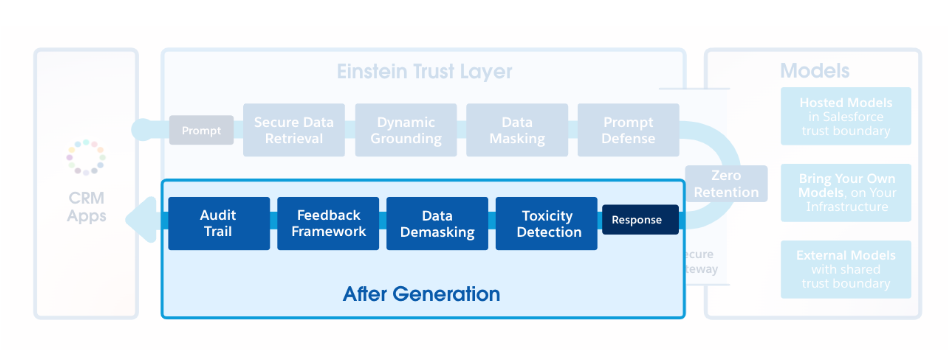

Toxicity Scoring

Salesforce’s Einstein Trust Layer ensures safe communication by assigning content toxicity scores. It evaluates language for harmful elements such as hate speech or harassment, helping organizations monitor and block inappropriate content.

All toxicity scores are logged and stored in Data Cloud, forming part of the organization’s audit trail. This allows for easy tracking and review of flagged content, supporting transparency and compliance.
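The score-then-log flow can be sketched in a few lines. This example uses a keyword lookup purely as a stand-in for a real toxicity classifier, which would normally be a trained model; the term weights, the `THRESHOLD` value, and the in-memory `audit_log` list are all assumptions for illustration.

```python
from dataclasses import dataclass

# Stand-in for a trained classifier: hypothetical terms and weights.
FLAGGED_TERMS = {"idiot": 0.6, "hate": 0.8}
THRESHOLD = 0.5  # assumed cut-off above which content is blocked


@dataclass
class ScoredText:
    text: str
    score: float
    blocked: bool


audit_log = []  # in this sketch, an in-memory list plays the role of the log


def score_text(text):
    """Score text, decide whether to block it, and record the result."""
    words = [word.strip(".,!?").lower() for word in text.split()]
    score = max((FLAGGED_TERMS.get(word, 0.0) for word in words), default=0.0)
    result = ScoredText(text, score, score >= THRESHOLD)
    audit_log.append(result)  # every score is logged, blocked or not
    return result


ok = score_text("Thanks for your help!")
bad = score_text("I hate this.")
print(ok.blocked, bad.blocked)
```

Note that both results are appended to the log, not just the blocked one, which is what makes later review of scoring decisions possible.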

Data Demasking

The Trust Layer also features data demasking: once the LLM returns a response, the placeholders that stood in for sensitive values are replaced with the original data, so authorized users see a complete result. This keeps sensitive data hidden from the model during regular interactions while ensuring appropriate access for legitimate business purposes.
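A common way to implement demasking is to swap placeholder tokens in the model's response back to the values they replaced. The sketch below assumes that approach; the `demask` function, the token format, and the sample mapping are hypothetical.

```python
def demask(response, mapping):
    """Swap placeholder tokens in a response back to their original values."""
    for token, original in mapping.items():
        response = response.replace(token, original)
    return response


# Assumed mapping, recorded when the prompt was masked.
mapping = {"<NAME_1>": "Jane Doe", "<EMAIL_1>": "jane@example.com"}
llm_response = "Hi <NAME_1>, we will send the invoice to <EMAIL_1>."

final = demask(llm_response, mapping)
print(final)
```

Because the mapping never leaves the trusted side, the model only ever sees the tokens, and the real values reappear only in the text shown to the user.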

Audit Trails

Every AI prompt is tracked at each step of its journey through the Trust Layer, ensuring transparency and accountability. This allows businesses to review how data is processed and used, adding a layer of control.
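As an illustration of what tracking a prompt through its journey might look like, the sketch below records one timestamped entry per processing stage. The stage names, the `audit_entry` helper, and the record layout are assumptions for this example, not the actual audit schema.

```python
import json
from datetime import datetime, timezone


def audit_entry(prompt_id, stage, detail):
    """Build one audit record for a single stage of a prompt's journey."""
    return {
        "prompt_id": prompt_id,
        "stage": stage,
        "detail": detail,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical stages for one prompt, in the order they occur.
trail = [
    audit_entry("p-001", "grounded", {"fields_merged": 2}),
    audit_entry("p-001", "masked", {"tokens_created": 1}),
    audit_entry("p-001", "scored", {"toxicity": 0.0}),
    audit_entry("p-001", "demasked", {"tokens_restored": 1}),
]

# One JSON object per line is a common format for append-only logs.
print("\n".join(json.dumps(entry) for entry in trail))
```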

Integration with Large Language Models (LLMs)

A standout feature of the Einstein Trust Layer is its ability to work with a range of large language models (LLMs), both those hosted within Salesforce and those from outside providers. This adaptability allows businesses to securely use AI tools from different providers while ensuring consistent protection. Whether a company is generating sales emails or summarizing work tasks, the Trust Layer keeps data safe throughout the process.

FAQs

1. Can organizations customize their security settings within the Einstein Trust Layer?

Yes, organizations can customize their security settings within the Einstein Trust Layer. They can mask specific data fields, set up system policies according to their use cases, and define user permissions to effectively control access to sensitive information.

2. Is the Einstein Trust Layer compatible with third-party AI tools?

Yes, the Einstein Trust Layer is designed to work with various third-party large language models (LLMs). This compatibility allows businesses to securely utilize AI tools from different providers while maintaining consistent data protection across their operations.

Conclusion

Salesforce’s Einstein Trust Layer sets a new benchmark for data security in the era of generative AI. By offering strong protections and ensuring compliance, it enables businesses to leverage AI technologies while safeguarding data privacy. As organizations explore AI’s potential, adopting solutions like the Einstein Trust Layer will be vital for navigating data security challenges.